Convert Nginx rule to F5 irule

Hi Team, I need some help in converting the below NGINX Rule to F5 iRule. proxy_set_header Host $host; proxy_set_header X-Real-IP $remote_addr; proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for; proxy_set_header X-Forwarded-Proto $scheme; # WebSocket specific proxy_http_version 1.1; proxy_set_header Upgrade $http_upgrade; proxy_set_header Connection "upgrade";66Views0likes3CommentsNGINX Virtual Machine Building with cloud-init

Traditionally, building new servers was a manual process. A system administrator had a run book with all the steps required and would perform each task one by one. If the admin had multiple servers to build the same steps were repeated over and over. All public cloud compute platforms provide an automation tool called cloud-init that makes it easy to automate configuration tasks while a new VM instance is being launched. In this article, you will learn how to automate the process of building out a new NGINX Plus server usingcloud-init.206Views3likes4CommentsGlobal Live Webinar(07/31): Kubernetes Connectivity Evolved: NGINX Gateway Fabric

Kubernetes Connectivity Evolved: NGINX Gateway Fabric Global Live Webinar(07/31): Kubernetes Connectivity Evolved: NGINX Gateway Fabric Date: July 31, 2024 Time: 10:00am PT | 1:00pm ET Speakers: llya Krutov - Product Marketing Manager, F5 NGINX Akash Ananthanarayanan - Technical Marketing Manager, F5 NGINX Mike Stefaniak - Sr. Product Manager, F5 NGINX What's the webinar about? Are you grappling with the intricacies of Ingress controllers and service meshes in Kubernetes? You're not alone. These traditional methods can lead to a variety of challenges including complexity, overhead, and compatibility issues. We're excited to introduce NGINX Gateway Fabric, a groundbreaking platform tool that redefines application delivery within Kubernetes. This unified fabric is crafted to eliminate the headaches associated with app, service, and API connectivity. Our expert speakers will delve into the nuances of deploying NGINX Gateway Fabric, sharing invaluable insights on best practices and self-service governance for robust, secure, and fast connectivity. This session is a must-attend for Platform Architects & Engineers, DevOps teams, and System Administrators who are keen to build a resilient and future-proof Kubernetes environment. 💡 Key Takeaways: Discover the strategic advantages of the Kubernetes Gateway API, independent of Ingress controllers. Learn to seamlessly address complex Kubernetes connectivity scenarios with NGINX Gateway Fabric. Understand the ecosystem's native interoperability, including hands-on integrations with tools like Prometheus and Grafana. Don't miss this opportunity to stay ahead in the Kubernetes sphere. Join us in paving the way for a smarter, more efficient connectivity framework with NGINX Gateway Fabric. To register, click here17Views0likes0CommentsSecuring and Scaling Hybrid Apps with F5/NGINX (Part 3)

In part 2 of our series, I demonstrated how to configure ZT (Zero Trust) use cases centering around authentication with NGINX Plus in hybrid environments. We deployed NGINX Plus as the external LB to route and authenticate users connecting to my Kubernetes applications. In this article, we explore other areas of the ZT spectrum configurable on the External LB Service, including: Authorization and Access Encryption mTLS Monitoring/Auditing ZT Use case #1: Authorization Many people think that authentication and authorization can be used interchangeably. However, they both mean different things. Authentication involves the process of verifying user identities based on the credentials presented. Even though authenticated users are verified by the system, they do not necessarily have the authority to access protected applications. That is where authorization comes into play. Authorization involves the process of verifying the authority of an identity before granting access to application. Authorization in the context of OIDC authentication involves retrieving claims from user ID tokens and setting conditions to validate whether the user is authorized to enter the system. An authenticated user is granted an ID token from the IdP with specific user information through JWT claims. The configuration of these claims is typically set from the IdP. Revisiting the OIDC auth use case configured in the previous section, we can retrieve the ID tokens of authenticated users from the NGINX key-value store. $ curl -i http://localhost:8010/api/9/http/keyvals/oidc_acess_tokens Then we can view the decoded value of the ID token using jwt.io. Below is an example of decoded payload data from the ID token. { "exp": 1716219261, "iat": 1716219201, "admin": true, "name": "Micash", "zone_info": "America/Los_Angeles" "jti": "9f8ff4bd-4857-4e12-9634-e5876f786f98", "iss": "http://idp.f5lab.com:8080/auth/realms/master", "aud": "account", "typ": "Bearer", "azp": "appworld2024", "nonce": "gMNK3tu06j6tp5-jGa3aRhkj4F0P-Z3e04UfcFeqbes" } NGINX Plus has access to these claims as embedded variables. They are accessed by prefixing $jwt_claim_ to the desired field (for example, $jwt_claim_admin for the admin claim). We can easily set conditions on these claims and block unauthorized users before they even reach the back-end applications. Going back to our frontend.conf file in the previous part of our series. We can set $jwt_flag variable to 0 or 1 based on the value of the admin JWT claim. We then use the jwt_claim_require directive to validate the ID token. ID tokens with admin claims set to false will be rejected. map $jwt_claim_admin $jwt_status { "true" 1; default 0; } server { include conf.d/openid_connect.server_conf; # Authorization code flow and Relying Party processing error_log /var/log/nginx/error.log debug; # Reduce severity level as required listen [::]:443 ssl ipv6only=on; listen 443 ssl; server_name example.work.gd; ssl_certificate /etc/ssl/nginx/default.crt; # self-signed for example only ssl_certificate_key /etc/ssl/nginx/default.key; location / { # This site is protected with OpenID Connect auth_jwt "" token=$session_jwt; error_page 401 = @do_oidc_flow; auth_jwt_key_request /_jwks_uri; # Enable when using URL auth_jwt_require $jwt_status; proxy_pass https://cluster1-https; # The backend site/app } } Note: Authorization with NGINX Plus is not restricted to only JWT tokens. You can technically set conditions on a variety of attributes, such as: Session cookies HTTP headers Source/Destination IP addresses ZT use case #2: Mutual TLS Authentication (mTLS) When it comes to ZT, mTLS is one of the mainstream use cases falling under the Zero Trust umbrella. For example, enterprises are using Service Mesh technologies to stay compliant with ZT standards. This is because Service Mesh technologies aim to secure service to service communication using mTLS. In many ways, mTLS is similar to the OIDC use case we implemented in the previous section. Only here, we are leveraging digital certificates to encrypt and authenticate traffic. This underlying framework is defined by PKI (Public Key Infrastructure). To explain this framework in simple terms we can refer to a simple example; the driver's license you carry in your wallet. Your driver’s license can be used to validate your identity, the same way digital certificates can be used to validate the identity of applications. Similarly, only the state can issue valid driver's licenses, the same way only Certificate Authorities (CAs) can issue valid certificates to applications. It is also important that only the state can issue valid certificates. Therefore, every CA must have a private secure key to sign and issue valid certificates. Configuring mTLS with NGINX can be broken down in two parts: Ingress mTLS; Securing SSL client traffic and validating client certificates against a trusted CA. Egress mTLS; securing SSL upstream traffic and offloading authentication of TLS material to a trusted HTTPS back-end server. Ingress mTLS You can configure ingress mTLS on the NLK deployment by simply referencing the trusted certificate authority adding the ssl_client_certificate directive in the server context. This will configure NGINX to validate client certificates with the referenced CA. Note: If you do not have a CA, you can create one using OpenSSL or Cloudflare PKI and TLS toolkits server { listen 443 ssl; status_zone https://cafe.example.com; server_name cafe.example.com; ssl_certificate /etc/ssl/nginx/default.crt; ssl_certificate_key /etc/ssl/nginx/default.key; ssl_client_certificate /etc/ssl/ca.crt; } Egress mTLS Egress mTLS is a slight alternative to ingress mTLS where NGINX verifies certificates of upstream applications rather than certificates originating from clients. This feature can be enabled by adding the proxy_ssl_trusted_certificate directive to the server context. You can reference the same trusted CA we used for verification when configuring ingress mTLS or reference a different CA. In addition to verifying server certificates, NGINX as a reverse-proxy can pass over certs/keys and offload verification to HTTPS upstream applications. This can be done by adding the proxy_ssl_certificate and proxy_ssl_certificate_key directives in the server context. server { listen 443 ssl; status_zone https://cafe.example.com; server_name cafe.example.com; ssl_certificate /etc/ssl/nginx/default.crt; ssl_certificate_key /etc/ssl/nginx/default.key; #Ingress mTLS ssl_client_certificate /etc/ssl/ca.crt; #Egress mTLS proxy_ssl_certificate /etc/nginx/secrets/default-egress.crt; proxy_ssl_certificate_key /etc/nginx/secrets/default-egress.key; proxy_ssl_trusted_certificate /etc/nginx/secrets/default-egress-ca.crt; } ZT use case #3: Secure Assertion Markup Language (SAML) SAML (Security Assertion Markup Language) is an alternative SSO solution to OIDC. Many organizations may choose between SAML and OIDC depending on requirements and IdPs they currently run in production. SAML requires a SP (Service Provider) to exchange XML messages via HTTP POST binding to a SAML IdP. Once exchanges between the SP and IdP are successful, the user will have session access to the protected backed applications with one set of user credentials. In this section, we will configure NGINX Plus as the SP and enable SAML with the IdP. This will be like how we configured NGINX Plus as the relying party in an OIDC authorization code flow (See ZT Use case #1). Setting up the IdP The one prerequisite is setting up your IdP. In our example, we will set up the Microsoft Entra ID on Azure. You can use the SAML IdP of your choosing. Once the SAML application is created in your IdP, you can access the SSO fields necessary to link your SP (NGINX Plus) to your IdP (Microsoft Entra ID). You will need to edit the basic SAML configuration by clicking on the pencil icon next to Editin Basic SAML Configuration, as seen in the figure above. Add the following values and click Save: Identifier (Entity ID) -- https://fourth.run.place Reply URL (Assertion Consumer Service URL) -- https://fourth.run.place/saml/acs Sign on URL: https://fourth.run.place Logout URL (Optional): https://fourth.run.place/saml/sls Finally download the Certificate (Raw) from Microsoft Entra ID and save it to your NGINX Plus instance. This certificate is used to verify signed SAML assertions received from the IdP. Once the certificate is saved on the NGINX Plus instance, extract the public key from the downloaded certificate and convert it to SPKI format. We will use this certificate later when we configure NGINX Plus in the next section. $ openssl x509 -in demo-nginx.der -outform DER -out demo-nginx.der $ openssl x509 -inform DER -in demo-nginx.der -pubkey -noout > demo-nginx.spki Configuring NGINX Plus as the SAML Service Provider After the IdP is setup, we can configure NGINX Plus as the SP to exchange and validate XML messages with the IdP. Once logged into the NGINX Plus instance, simply clone the nginx SAML GitHub repo. $ git clone https://github.com/nginxinc/nginx-saml.git && cd nginx-saml Copy the config files into the /etc/nginx/conf.d directory. $ cp frontend.conf saml_sp.js saml_sp.server_conf saml_sp_configuration.conf /etc/nginx/conf.d/ Notice that by default, frontend.conf listens on port 8010 with clear text http. You can merge kube_lb.conf into frontend.conf to enable TLS termination and update the upstream context with application endpoints you wish to protect with SAML. Finally we will need to edit the saml_sp_configuration.conf file and update variables in the map context based on the parameters of your SP and IdP: $saml_sp_entity_id; https://fourth.run.place $saml_sp_acs_url; https://fourth.run.place/saml/acs $saml_sp_sign_authn; false $saml_sp_want_signed_response; false $saml_sp_want_signed_assertion; true $saml_sp_want_encrypted_assertion; false $saml_idp_entity_id; Unique identifier that identifies the IdP to the SP. This field is retrieved from your IdP $saml_idp_sso_url; This is the login URL and is also retrieved from the IdP $saml_idp_verification_certificate; Variable referencing the certificate downloaded from the previous section when setting up the IdP. This certificate will verify signed assertions received from the IdP. Use the full directory (/etc/nginx/conf.d/demo-nginx.spki) $saml_sp_slo_url; https://fourth.run.place/saml/sls $saml_idp_slo_url; This is the logout URL retrieved from the IdP $saml_sp_want_signed_slo; true The remaining variables defined in saml_sp_configuration.conf can be left unchanged, unless there is a specific requirement for enabling them. Once the variables are set appropriately, we can reload NGINX Plus. $ nginx -s reload Testing Now we will verify the SAML flow. open your browser and enter https://fourth.run.place in the address bar. This should redirect me to the IDP login page. Once you login with your credentials, I should be granted access to my protected application ZT use case #4: Monitoring/Auditing NGINX logs/metrics can be exported to a variety of 3rd party providers including: Splunk, Prometheus/Grafana, cloud providers (AWS CloudWatch and Azure Monitor Logs), Datadog, ELK stack, and more. You can monitor NGINX metrics and logs natively with NGINX Instance Manager or NGINX SaaS. The NGINX Plus API provides me a lot of flexibility by exporting metrics to any third-party tool that accepts JSON. For example, you can export NGINX Plus API metrics to our native real-time dashboard from part 1. native real-time dashboard from part 1 Whichever tool I chose, monitoring/auditing my data generated from my IT systems is key to understanding and optimizing my applications. Conclusion Cloud providers offer a convenient way to expose Kubernetes Services to the internet. Simply create Kubernetes Service of type: LoadBalancer and external users connect to your services via public entry point. However, cloud load balancers do nothing more than basic TCP/HTTP load balancing. You can configure NGINX Plus with many Zero Trust capabilities as you scale out your environment to multiple clusters in different regions, which is what we will cover in the next part of our series.159Views2likes0CommentsF5 Certified Administrator NGINX certification now available!

F5 Certified Administrator NGINX certification now available! The F5 Certification team is thrilled to announce that the first official F5 NGINX certification is now live! Introducing the F5 Certified Administrator NGINX certification. The NGINX Certification validates a candidate's understanding of how NGINX works and their ability to perform basic functions related to the administration of NGINX acting as a web and proxy server. To achieve the F5 Certified Administrator NGINX certification, candidates must be a part of the F5 Certification program and pass all four of the following NGINX exams: NGINX Management NGINX Configuration: Knowledge NGINX Configuration: Demonstrate NGINX Troubleshooting About the NGINX certification exams: Each exam contains 30 questions and is 30 minutes long. Each exam costs $50 USD. Each exam is delivered online through Certiverse and can be taken in any order. Preparation for the NGINX Certification exams begins with theNGINX OSS Blueprint. The NGINX certification is based on NGINX Open Source Software, not NGINX+. NGINX Certification FAQs. You can registerherefor the F5 Certification program to schedule NGINX exams. Note*: This link takes you to a non-F5 site where you will need to establish an account login if you haven not already done so.* Go toF5 Certification Program site for additional NGINX training resources. For additional information, refer to theNGINX Certification FAQpage. If you have additional questions, please emailsupport@mail.education.f5.com And check out Buu Lam's conversation with Dylan Thomas, one of the first people to be NGINX Certified, to learn about how to prepare for the exam. 67Views0likes0Comments

67Views0likes0CommentsF5 XC vk8s workload with Open Source Nginx

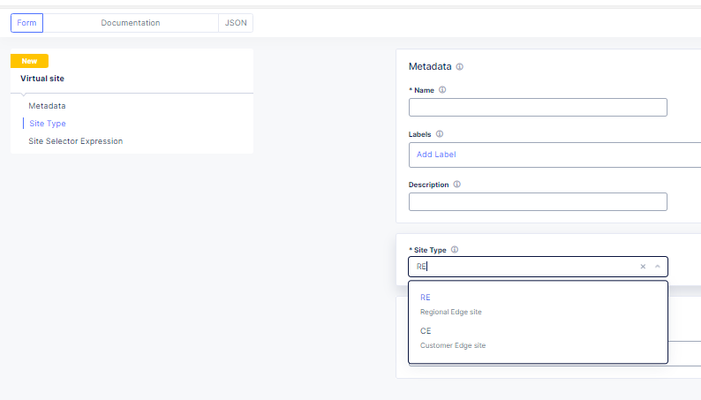

I have shared the code in the link below under Devcentral code share: F5 XC vk8s open source nginx deployment on RE | DevCentral Here I will desribe the basic steps for creating a workload object that is F5 XC custom kubernetes object that creates in the background kubernetes deployments, pods and Cluster-IP type services. The free unprivileged nginx image nginxinc/docker-nginx-unprivileged: Unprivileged NGINX Dockerfiles (github.com) Create a virtual site that groups your Regional Edges and Customer Edges. After that create the vk8s virtual kubernetes and relate it to the virtual site."Note": Keep in mind for the limitations of kubernetes deployments on Regional Edges mentioned in Create Virtual K8s (vK8s) Object | F5 Distributed Cloud Tech Docs. First create the workload object and select type service that can be related to Regional Edge virtual site or Customer Edge virtual site. After select the container image that will be loaded from a public repository like github or private repo. You will need to configure advertise policy that will expose the pod/container with a kubernetes cluster-ip service. If you are deploying test containers, you will not need to advertise the container . To trigger commands at a container start, you may need to use /bin/bash -c -- and a argument."Note": This is not related for this workload deployment and it is just an example. Select to overwrite the default config file for the opensource nginx unprivileged with a file mount."Note": the volume name shouldn't have a dot as it will cause issues. For the image options select a repository with no rate limit as otherwise you will see the error under the the events for the pod. You can also configure command and parameters to push to the container that will run on boot up. You can use empty dir on the virtual kubernetes on the Regional Edges for volume mounts like the log directory or the Nginx Cache zone but the unprivileged Nginx by default exports the logs to the XC GUI, so there is no need. "Note": This is not related for this workload deployment and it is just an example. The Logs and events can be seen under the pod dashboard and even the container/pod can accessed. "Note": For some workloads to see the logs from the XC GUI you will need to direct the output to stderr but not for nginx. After that you can reference the auto created kubernetes Cluster-IP service in a origin pool, using the workload name and the XC namespace (for example niki-nginx.default). "Note": Use the same virtual-site where the workload was attached and the same port as in the advertise cluster config. Deployments and Cluster-IP services can be created directly without a workload but better use the workload option. When you modify the config of the nginx actually you are modifying a configmap that the XC workload has created in the background and mounted as volume in the deployment but you will need to trigger deployment recreation as of now not supported by the XC GUI. From the GUI you can scale the workload to 0 pod instances and then back to 1 but a better solution is to use kubectl. You can log into the virtual kubernetes like any other k8s environment using a cert and then you can run the command "kubectl rollout restart deployment/niki-nginx". Just download the SSL/TLS cert. You can automate the entire process using XC API and then you can use normal kubernetes automation to run the restart command F5 Distributed Cloud Services API for ves.io.schema.views.workload | F5 Distributed Cloud API Docs! Related resources: For nginx Plus deployments for advanced functions like SAML or OpenID Connect (OIDC) or the advanced functions of the Nginx Plus dynamic modules like njs that is allowing java scripting (similar to F5 BIG-IP or BIG-IP Next TCL based iRules), see: Enable SAML SP on F5 XC Application Bolt-on Auth with NGINX Plus and F5 Distributed Cloud Dynamic Modules | NGINX Documentation njs scripting language (nginx.org)268Views2likes1Comment